A New Chapter

Professional Experience

Data Mechanics Graduate Teaching Fellowship (Fall 2024)


Led labs on data flows and computational workflows in large systems and guided students through the data science lifecycle, from question formulation to decision-making; emphasized SQL and NoSQL paradigms for building scalable data systems; covered statistical inference, prediction, and algorithm development.

Data Science Internship (Summer 2024)


Delivered ad hoc reports on the demographics within a 30-mile radius of a location to assess the opportunity zone; scraped data such as family income, median age, and occupation around competitors' locations using BeautifulSoup and Selenium (for dynamic pages) to build a dataset; after preprocessing, applied a machine learning classifier to generate similarity score reports that supported successful M&A decisions.

Data Science Graduate Teaching Fellowship (Spring 2024)


For the Spring 2024 semester, I was selected as a Teaching Assistant for the course Foundations of Data Science in the Center for Computing & Data Sciences (CDS) at Boston University. I facilitated weekly Linear Algebra discussion sessions to build understanding of vector spaces, independence, orthogonality, and matrix factorizations, and answered students' questions.

Technical Projects

Python for Data Processing & Automation in Data Warehousing (Fall 2024)

Developed Python scripts in Jupyter Notebook to process and analyze structured datasets, including random number generation, date manipulations, and file handling.

Integrated Python with SQL databases, automating data extraction, transformation, and cleansing for analytical processing in a data warehouse environment.

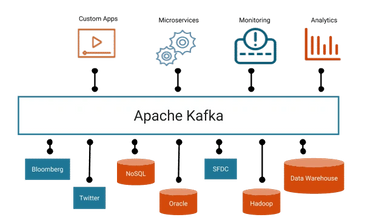

Apache Kafka for Real-Time Data Streaming (Fall 2024)

Implemented a real-time data streaming pipeline using Apache Kafka and Confluent Cloud, configuring producers, topics, and consumers to process live event data.

Configured Kafka Topics, Brokers, & Partitions to build a distributed event-driven system, optimizing data flow, scalability, and fault tolerance for real-time processing.

Semi-Structured Data Processing & Visualization with Apache Spark (Fall 2024)


Processed and analyzed 5GB of semi-structured JSON data using Apache Spark, leveraging PySpark DataFrames, SQL functions, and aggregation techniques for efficient data transformation.

Created 10+ bar charts, pie charts, and summary tables to visualize insights, showing that outdoor activities were 35% more frequent than indoor activities.

Hospital Database Optimization, Appointment Automation & Audit System (Fall 2024)


Designed a relational database schema with keys, constraints, and normalization, optimizing hospital data management and query efficiency. Implemented complex SQL queries using joins, subqueries, aggregations (GROUP BY, HAVING), and OLAP functions (ROLLUP, CUBE, RANK) to extract and analyze hospital data.


Optimized the relational database schema by applying normalization techniques, indexing, and constraints to improve query efficiency and data integrity.

Developed triggers and stored procedures to automate hospital appointment scheduling, enforce business rules (doctor availability, valid time slots), and track historical changes through an audit log system.

Created user-defined functions (UDFs) to validate doctor-hospital relationships and determine the next available appointment slot, optimizing scheduling workflows and preventing conflicts.
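
The audit-log idea above can be sketched with SQLite through Python's built-in `sqlite3` module. The table and column names (`appointment`, `appointment_audit`, `slot`) are hypothetical and not taken from the project, and the original work may well have targeted a different database engine; this is only a minimal illustration of a trigger that records historical changes and a constraint that prevents double-booking.

```python
import sqlite3

def build_demo_db():
    """Create an in-memory database with a hypothetical appointment table and audit trigger."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE appointment (
            id INTEGER PRIMARY KEY,
            doctor_id INTEGER NOT NULL,
            slot TEXT NOT NULL,
            UNIQUE (doctor_id, slot)   -- a doctor cannot be double-booked
        );
        CREATE TABLE appointment_audit (
            appointment_id INTEGER,
            old_slot TEXT,
            new_slot TEXT,
            changed_at TEXT DEFAULT CURRENT_TIMESTAMP
        );
        -- Record every change of an appointment's slot in the audit table.
        CREATE TRIGGER trg_appointment_update
        AFTER UPDATE OF slot ON appointment
        BEGIN
            INSERT INTO appointment_audit (appointment_id, old_slot, new_slot)
            VALUES (OLD.id, OLD.slot, NEW.slot);
        END;
    """)
    return conn

conn = build_demo_db()
conn.execute("INSERT INTO appointment (doctor_id, slot) VALUES (1, '2024-10-01 09:00')")
conn.execute("UPDATE appointment SET slot = '2024-10-01 10:00' WHERE id = 1")

# The trigger has written one audit row describing the reschedule.
audit_rows = conn.execute(
    "SELECT appointment_id, old_slot, new_slot FROM appointment_audit"
).fetchall()
print(audit_rows)
```

Keeping the audit logic in a trigger means every update path is logged, regardless of which application or procedure issued the change.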

Global Distributed Database Architecture for CampusEats (Fall 2024)


Designed and implemented a distributed database system for a globally expanding food delivery platform by applying horizontal and vertical fragmentation, ensuring efficient data allocation across North America, Europe, and Asia.


Optimized database scalability and availability by implementing data replication, partitioning strategies, and Amazon RDS-based multi-region replication, reducing query latency and ensuring fault tolerance.
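
As a rough illustration of the two fragmentation styles mentioned above, the sketch below splits a small in-memory table by region (horizontal) and by column group (vertical), then rejoins the vertical fragments on the primary key. The record layout, field names, and values are hypothetical and are not the CampusEats schema.

```python
# Hypothetical order records; field names and values are illustrative only.
ORDERS = [
    {"order_id": 1, "region": "North America", "customer": "A", "total": 18.5, "card_token": "tok_1"},
    {"order_id": 2, "region": "Europe", "customer": "B", "total": 22.0, "card_token": "tok_2"},
    {"order_id": 3, "region": "Asia", "customer": "C", "total": 9.75, "card_token": "tok_3"},
    {"order_id": 4, "region": "Europe", "customer": "D", "total": 31.2, "card_token": "tok_4"},
]

def horizontal_fragments(rows, key):
    """Split rows into one fragment per distinct value of `key` (row-wise split)."""
    fragments = {}
    for row in rows:
        fragments.setdefault(row[key], []).append(row)
    return fragments

def vertical_fragment(rows, columns, primary_key="order_id"):
    """Project rows onto `columns`, always keeping the primary key for rejoining."""
    keep = [primary_key] + [c for c in columns if c != primary_key]
    return [{c: row[c] for c in keep} for row in rows]

def rejoin(left, right, primary_key="order_id"):
    """Reconstruct full rows from two vertical fragments by joining on the primary key."""
    right_by_key = {row[primary_key]: row for row in right}
    return [{**row, **right_by_key[row[primary_key]]} for row in left]

by_region = horizontal_fragments(ORDERS, "region")
order_part = vertical_fragment(ORDERS, ["region", "customer", "total"])
payment_part = vertical_fragment(ORDERS, ["card_token"])

for region, rows in by_region.items():
    print(region, [r["order_id"] for r in rows])
```

Each horizontal fragment could be placed near the users of its region, while a vertical fragment such as the payment columns could be stored separately; the primary key is repeated in every vertical fragment so the original rows stay reconstructible.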

Scalable Data Warehousing & Analytics on Microsoft Azure (Fall 2024)


Developed a cloud-based pipeline using Azure Data Factory to extract, transform, and load structured and semi-structured data into Synapse Analytics. Integrated Blob Storage for retrieval of large datasets while optimizing storage costs.

Enhanced query performance and business intelligence by optimizing Azure Synapse SQL Pools with partitioning, indexing, and materialized views, reducing execution time by 40%. Integrated Tableau for real-time analytics, enabling advanced reporting and data-driven decision-making.

Transforming Dining Services: An AI-Based Information System for Food Waste Management (Spring 2024)


Worked with cross-functional teams, including business leaders and product owners, to gather and understand requirements for the AI-Powered Food Inventory Management System. Created clear user stories and acceptance criteria aimed at reducing food waste from 18% to 10%, potentially saving Boston University approximately $1.3 million annually. Coordinated closely with the development and quality assurance teams in project meetings, such as planning sessions and daily check-ins, using Azure DevOps to manage tasks and track progress. Helped improve software delivery processes, contributing to a projected payback period of 3.9 years for the project.

Database Performance Tuning and Data Mart for Taxi Analytics (Spring 2024)


Implemented and automated Extract, Transform & Load (ETL) operations for a large 4GB unstructured taxi dataset with 15 million rows: after cleaning and validating the data, loaded it into a staging table and then into a structured relational database defined with DDL, and finally built a Data Mart (dimensional model) for Online Analytical Processing (OLAP).

Improved query performance by using PL/SQL and T-SQL to create partitions, stored procedures, window functions, and CTEs, reducing query processing time by 4 to 6 seconds.
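
The staging-table-to-data-mart flow described above can be sketched on a toy scale with Python's built-in `sqlite3` module. The table names (`stg_trip`, `dim_zone`, `fact_trip`), columns, and rows are invented for illustration; the original project used PL/SQL and T-SQL on a far larger dataset, so this is only an assumed, simplified shape of the pipeline.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Staging table: raw text exactly as extracted (hypothetical columns).
    CREATE TABLE stg_trip (pickup_date TEXT, zone TEXT, fare TEXT);

    -- Dimension and fact tables of a small star schema.
    CREATE TABLE dim_zone (zone_id INTEGER PRIMARY KEY, zone_name TEXT UNIQUE);
    CREATE TABLE fact_trip (
        pickup_date TEXT,
        zone_id INTEGER REFERENCES dim_zone(zone_id),
        fare REAL
    );
""")

raw_rows = [
    ("2024-01-01", "Midtown", "12.50"),
    ("2024-01-01", "Harbor", "8.00"),
    ("2024-01-02", "Midtown", "n/a"),   # invalid fare, dropped during cleaning
    ("2024-01-02", "Midtown", "20.00"),
]
conn.executemany("INSERT INTO stg_trip VALUES (?, ?, ?)", raw_rows)

# Transform and load. In SQLite, CAST('n/a' AS REAL) yields 0.0,
# so the "> 0" filter removes non-numeric and non-positive fares.
conn.executescript("""
    INSERT INTO dim_zone (zone_name)
    SELECT DISTINCT zone FROM stg_trip ORDER BY zone;

    INSERT INTO fact_trip (pickup_date, zone_id, fare)
    SELECT s.pickup_date, d.zone_id, CAST(s.fare AS REAL)
    FROM stg_trip AS s
    JOIN dim_zone AS d ON d.zone_name = s.zone
    WHERE CAST(s.fare AS REAL) > 0;
""")

# An OLAP-style query against the data mart: total fare per zone.
totals = conn.execute("""
    SELECT d.zone_name, SUM(f.fare)
    FROM fact_trip AS f JOIN dim_zone AS d USING (zone_id)
    GROUP BY d.zone_name ORDER BY d.zone_name
""").fetchall()
print(totals)
```

Loading into a text-only staging table first keeps extraction simple and lets all validation happen in one place before rows reach the typed fact table.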

Depressive Disorder Predictive Model Using a Comprehensive Data Mining Approach (Spring 2024)


Employed Naïve Bayesian, Boruta, and CFS feature selection techniques on a large dataset of 300 variables after preprocessing to ensure the consistency of the data, then applied 6 different machine learning classifiers in R. Tuned classifier hyperparameters to achieve a True Positive Rate (TPR) exceeding 80% in predictive modeling, demonstrating the ability to handle imbalanced raw data within a comprehensive data mining framework.
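
To show why TPR is a more telling metric than accuracy on imbalanced data, here is a small Python sketch (the project itself was done in R). The labels and predictions are made up for illustration and are unrelated to the project's dataset.

```python
def true_positive_rate(y_true, y_pred, positive=1):
    """TPR (recall) = TP / (TP + FN), computed over the positive class only."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("no positive examples in y_true")
    return tp / (tp + fn)

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Made-up labels: 10 positives among 100 cases (imbalanced).
y_true = [1] * 10 + [0] * 90

# A useless model that always predicts the majority class.
always_negative = [0] * 100

# A hypothetical model that finds 9 of 10 positives at the cost of 5 false alarms.
model_pred = [1] * 9 + [0] * 1 + [1] * 5 + [0] * 85

print(accuracy(y_true, always_negative), true_positive_rate(y_true, always_negative))
print(accuracy(y_true, model_pred), true_positive_rate(y_true, model_pred))
```

The always-negative model looks strong on accuracy yet detects no positive case, which is why TPR is the metric reported for the minority class.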

Portfolio Optimization and Risk Management Using Market Volume and Dividend Yield Strategies (Spring 2024)


Developed and implemented portfolio optimization methodologies in Python using dividend yield and market volume weighting, which improved risk-adjusted returns by around 15% over a 5-year backtesting period. Created a more precise forecasting model, with a 20% decrease in portfolio variance, by incorporating time series analysis for over 100 tickers, optimizing portfolio rebalancing strategies, and designing an extensive stock data analysis pipeline.
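
One simple way to combine dividend yield and market volume into portfolio weights is sketched below. The exact weighting scheme used in the project is not stated, so the proportional weighting, the `alpha` mixing parameter, and the placeholder tickers and numbers are all assumptions made for illustration.

```python
def proportional_weights(scores):
    """Normalize non-negative scores into portfolio weights that sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("scores must have a positive sum")
    return {ticker: value / total for ticker, value in scores.items()}

def blended_weights(dividend_yield, volume, alpha=0.5):
    """Blend yield-based and volume-based weights; alpha is a hypothetical mixing knob."""
    w_yield = proportional_weights(dividend_yield)
    w_volume = proportional_weights(volume)
    return {t: alpha * w_yield[t] + (1 - alpha) * w_volume[t] for t in dividend_yield}

# Placeholder tickers and numbers, not real market data.
dividend_yield = {"AAA": 0.02, "BBB": 0.04, "CCC": 0.02}
volume = {"AAA": 1_000_000, "BBB": 1_000_000, "CCC": 2_000_000}

weights = blended_weights(dividend_yield, volume)
for ticker, weight in weights.items():
    print(f"{ticker}: {weight:.3f}")
```

Because each component weighting already sums to 1, any blend with `alpha` between 0 and 1 also sums to 1, so no renormalization step is needed.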

Book My Staycation Database Design (Fall 2023)


This innovative database design project was tailored to streamline and enhance the user experience in booking hotels and stays. With a focus on user-friendly interfaces and efficient data management, our system aims to simplify the process of finding and reserving accommodations for a relaxing staycation. Through meticulous design and optimization, "BookMyStaycation" promises a seamless platform, empowering users to discover their ideal getaway destinations effortlessly.

The Game of Life (Fall 2023)


I used Python to apply the concepts I learned in the Information Structures course to develop this straightforward but engaging game. The game has three stages for three key age groups, each with an obstacle course tailored to the chosen age group. The hardest part of this project was not using any libraries at all, even though I had previously done some fairly advanced coding, including machine learning. Everything had to be coded from scratch.
